AI is all over the business world, whether it’s manufacturing or transportation. Some companies are building custom algorithm integrations, while others are giving their legacy infrastructure an AI makeover.

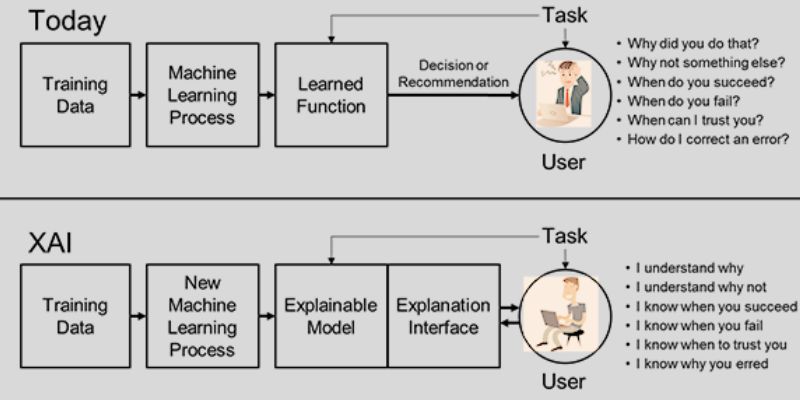

But with this growing reliance, eyebrows are being raised about how AI models make decisions. That’s typically because black-box ML models are a tough nut to crack, even for AI engineers. As a result, AI outputs are often seen as “shady” or, in some cases, inaccurate. So, how can a business ensure that AI outcomes are dependable and efficient? This is where explainable AI, or XAI, comes into the picture.

But what is explainable AI?

Explainable AI is not a single ML model but a set of techniques, often applied alongside deep learning, that make a model’s decisions understandable. Think of it as the responsible, accountable cousin in the AI family: it can disclose how your AI algorithm reached a certain decision. So, if you want your business decisions to be fairer and less exposed to AI biases, implementing this technology is a strong step in that direction.

With that sneak peek, let’s decode what explainable AI is and how it can make your business thrive in a world full of doubts.

What is Explainable AI? The Definition

Explainable AI consists of a range of techniques and approaches that enable human users to grasp and have confidence in the outcomes and output produced by machine learning algorithms.

Machine learning models are usually categorized as either white box or black box models. White box models offer more transparency and produce results that are easier for both users and developers to understand. On the other hand, black box models generate AI decisions or predictions that are extremely difficult to elucidate, even for AI experts.

Explainable AI (XAI) steps in to shed light on how an AI system reaches its decisions. It does this by revealing:

- The strengths and weaknesses of the program.

- The specific criteria the program employs when making a decision.

- The reasons behind why the program chooses a particular decision over other options.

- How much trust is appropriate for different types of decisions.

- The kinds of errors the program is susceptible to.

- Strategies for rectifying these errors.

Thus, when you ask what explainable AI is, you’re asking about the practices that uncover the clues and reasoning behind AI decisions. It’s not just about making AI more accurate; it’s also about checking that it is fair and catching biases. So, the best part about seeking software or enterprise solutions based on explainable AI is that you get visibility into what’s happening and how.

The Principles of Explainable AI

The National Institute of Standards and Technology (NIST) has laid out four vital principles for explainable AI. These principles will help you understand explainable AI holistically.

Explanation

Today, AI is not only about delivering results for businesses. It is increasingly about clarifying how those results were reached. That’s why NIST defines AI explainability as the ability to provide evidence, support, or reasoning behind an AI system’s decisions or processes. But here’s the catch: explainable AI is not one-size-fits-all. The form and level of detail of an explanation depend on factors like the context and the end users of your particular AI system.

Meaningful

The explanation you offer should also make sense to the people you’re targeting. For instance, if you have an AI image generation tool, the explanation of why the algorithm produced a particular result must be understandable to the users who see it.

But here’s the tricky part: if your user base spans a wide spectrum of knowledge and skills, your AI system should be versatile. It should dish out explanations that cater to the varied needs and levels of expertise within your user pool. Thus, the answer to what explainable AI is also points to an AI system that is highly user-friendly and adaptable.

Accuracy

This principle is a key reason explainable AI is getting so much attention. An explanation is only useful if it correctly reflects the process the system actually followed to produce its output. So, if you’re working with explainable AI, your programs should tick a few boxes. For any given outcome, the explanation of your system’s process should be:

- Clear

- Accurate

- Correct

Knowledge Limits

Your AI system should stick to what it knows best. In other words, it should stay within its “knowledge limits.” This means it should operate only within the specific conditions it was created for.

So, when you implement explainable AI, your system should only do its thing when it’s pretty sure about the results it’s giving. That’s the confidence factor at play. To boost trust and avoid any sneaky or risky outcomes, it’s a smart move to declare a system limit. This way, explainable AI will be your business’s safety net, protecting your solutions from any outcomes that could be misleading, dangerous, or unfair.
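
A minimal sketch of the confidence idea above, assuming a classifier that returns a probability per label. The function name `decide_within_limits`, the labels, and the 0.8 threshold are all hypothetical choices for illustration; a real system would pick its limit from validation data.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class Decision:
    label: Optional[str]  # None means the system declined to answer
    confidence: float
    note: str


def decide_within_limits(probabilities: Dict[str, float], threshold: float = 0.8) -> Decision:
    """Return the top label only when its probability clears the declared limit."""
    label, confidence = max(probabilities.items(), key=lambda item: item[1])
    if confidence < threshold:
        # Outside the declared limit: abstain instead of guessing.
        return Decision(None, confidence, "Below confidence threshold; route to human review.")
    return Decision(label, confidence, "Within declared operating limits.")


confident = decide_within_limits({"approve": 0.93, "reject": 0.07})
uncertain = decide_within_limits({"approve": 0.55, "reject": 0.45})
print(confident)
print(uncertain)
```

Here the second case is handed to a person rather than answered automatically, which is one simple way to keep a system inside its knowledge limits.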

Why is Explainable AI Important for Your Business?

McKinsey states, “Organizations that establish digital trust among consumers through practices such as making AI explainable are more likely to see their annual revenue and EBIT grow at rates of 10 percent or more.”

AI-based business decisions can have far-reaching consequences, affecting individual rights, human safety, and essential business operations. However, the inner workings of AI models can often seem like a well-guarded secret, leaving users and stakeholders in the dark. That’s why the question of why explainable AI is important takes center stage.

First and foremost, it’s About Trust.

When businesses deploy AI systems, they want to have confidence in the results these systems produce. Thus, you need to understand how your AI models arrive at their conclusions, what data they use, and whether you can rely on the outcomes. Explainability is the key to building trust.

It’s Also About Complexity.

AI models are becoming increasingly complex for humans to understand, thanks to techniques like deep learning and neural networks. This accentuates the need for explainability. As a business, you’d want to learn how your AI-based products or services work. That’s where explainability comes into play. With explainable AI, you’d have techniques that help experts and non-experts alike understand and interpret the outcomes your AI systems generate. This is a great business advantage as decoding the outcomes will enable you to meet the demands of customers, employees, and regulators much more efficiently.

Transparency is Crucial with AI’s Expanding Presence in Everyday Life.

Your users are well aware of their rights in the age of the internet. They are now conscious of how AI makes decisions that impact them. Some harmful algorithms have effects that linger even after corrections. They leave behind what experts call “algorithmic imprints” on society and people’s lives. Explainable AI can address such problems and empower users to assess whether AI systems are operating as intended.

Government Regulations

As governments worldwide work to regulate AI, explainability is likely to become even more critical. Initiatives like the Blueprint for an AI Bill of Rights in the United States underscore the importance of protecting personal data and ensuring responsible AI use. Here, explainable AI can help you mitigate compliance, legal, and security risks associated with your AI models.

How Does Explainable AI Work?

Explainable AI is a set of techniques and approaches designed to make the decision-making processes of artificial intelligence systems more transparent and understandable to humans. It aims to bridge the gap between the “black box” nature of many AI algorithms and the need for users to trust, interpret, and validate AI-generated outcomes.

XAI Methods Typically Involve:

Interpretable Models

Using simpler, transparent algorithms like decision trees or linear regression instead of complex deep neural networks makes it easier to understand how the AI arrives at its conclusions.
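
To illustrate why a linear model counts as interpretable, here is a minimal sketch in which each feature’s contribution is simply its weight times its value. The feature names, weights, and bias are hypothetical values made up for the example, not taken from any real scoring system.

```python
# Hypothetical weights for an illustrative loan-scoring model.
WEIGHTS = {"income_k": 0.04, "years_employed": 0.3, "missed_payments": -1.2}
BIAS = -2.0


def contributions(applicant):
    """Per-feature contribution to the score: weight * feature value."""
    return {name: WEIGHTS[name] * applicant[name] for name in WEIGHTS}


def score(applicant):
    """Linear score: bias plus the sum of all feature contributions."""
    return BIAS + sum(contributions(applicant).values())


applicant = {"income_k": 60, "years_employed": 5, "missed_payments": 2}
parts = contributions(applicant)
total = score(applicant)

# List the features by how strongly they moved the score, in either direction.
for name, value in sorted(parts.items(), key=lambda kv: abs(kv[1]), reverse=True):
    print(f"{name:>16}: {value:+.2f}")
print(f"{'bias':>16}: {BIAS:+.2f}")
print(f"{'score':>16}: {total:+.2f}")
```

Because the score is just a sum, every line of the printout is itself the explanation; nothing about the model is hidden.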

Feature Importance

Identifying and highlighting the most influential factors that contribute to AI predictions, helping users grasp which inputs matter most.
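
One common way to estimate feature importance is permutation importance: shuffle one input column and measure how much accuracy drops. The sketch below uses only the Python standard library, and the `model` function is a hypothetical stand-in for a black box that relies heavily on the first input, slightly on the second, and not at all on the third.

```python
import random


def model(row):
    """Stand-in black box: depends strongly on x0, weakly on x1, not at all on x2."""
    return 1 if 3.0 * row[0] + 0.5 * row[1] > 1.75 else 0


def accuracy(rows, labels):
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)


def permutation_importance(rows, labels, n_features, repeats=20, seed=0):
    """Average drop in accuracy when each feature column is shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(rows, labels)
    importances = []
    for j in range(n_features):
        drops = []
        for _ in range(repeats):
            column = [r[j] for r in rows]
            rng.shuffle(column)
            # Rebuild the rows with only column j replaced by shuffled values.
            shuffled = [r[:j] + [v] + r[j + 1:] for r, v in zip(rows, column)]
            drops.append(baseline - accuracy(shuffled, labels))
        importances.append(sum(drops) / repeats)
    return importances


data_rng = random.Random(42)
rows = [[data_rng.random() for _ in range(3)] for _ in range(500)]
labels = [model(r) for r in rows]  # labels come from the model, so baseline accuracy is 1.0

importances = permutation_importance(rows, labels, n_features=3)
for j, value in enumerate(importances):
    print(f"x{j}: {value:.3f}")
```

The unused third input scores exactly zero because shuffling it never changes a prediction, while the first input shows the largest drop.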

Visualization

Presenting AI-generated insights and reasoning through visual representations, such as heatmaps or graphs, to aid comprehension.

Natural Language Explanations

Providing plain-language explanations of AI decisions so users can grasp the rationale behind predictions or recommendations.
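
A plain-language explanation can be as simple as a template filled in from per-feature contributions. The sketch below assumes such contributions are already available; the function name `explain_in_words`, the feature names, and the numbers are hypothetical.

```python
def explain_in_words(decision, contributions, top_n=2):
    """Turn the largest contributions into a short, readable sentence."""
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)[:top_n]
    phrases = []
    for name, value in ranked:
        direction = "raised" if value > 0 else "lowered"
        phrases.append(f"{name.replace('_', ' ')} {direction} the score by {abs(value):.1f}")
    return f"Decision: {decision}. Main factors: " + "; ".join(phrases) + "."


message = explain_in_words(
    "declined",
    {"missed_payments": -2.4, "years_employed": 1.5, "income": 0.8},
)
print(message)
# Decision: declined. Main factors: missed payments lowered the score by 2.4; years employed raised the score by 1.5.
```

Limiting the sentence to the top few factors keeps it readable for non-experts, at the cost of leaving out smaller influences.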

Counterfactual Explanations

Showing how changing input variables could alter AI outcomes, enhancing understanding of system behavior.
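
As a minimal sketch of a counterfactual explanation, the code below searches for the smallest change to a single input that flips a decision. The `approve` rule, its weights, and the step sizes are hypothetical; practical counterfactual methods also search across several features at once and check that the suggested change is realistic.

```python
def approve(applicant):
    """Stand-in decision rule with hypothetical weights."""
    score = 0.04 * applicant["income_k"] - 1.2 * applicant["missed_payments"] - 0.5
    return score >= 0


def counterfactual(applicant, feature, step, max_steps=100):
    """Smallest change to one feature, in multiples of `step`, that flips the decision."""
    original = approve(applicant)
    candidate = dict(applicant)
    for n in range(1, max_steps + 1):
        candidate[feature] = applicant[feature] + n * step
        if approve(candidate) != original:
            return candidate[feature]
    return None  # no flip found within the search budget


applicant = {"income_k": 50, "missed_payments": 2}
needed_income = counterfactual(applicant, "income_k", step=5)
fewer_missed = counterfactual(applicant, "missed_payments", step=-1)

print(f"Approved today: {approve(applicant)}")
print(f"Would be approved with income_k = {needed_income}")
print(f"Would be approved with missed_payments = {fewer_missed}")
```

The result reads as actionable advice: this applicant would be approved with an income of 75 (in thousands) or with one missed payment instead of two.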

The Benefits of Explainable AI

If you’re someone struggling to instill confidence in your AI-based business, then explainable AI is for you. Indeed, a day might come when every business will consider it for ethical operations. But being one of the early adopters will make you earn brownie points. Moreover, with these techniques, custom enterprise software development will become extremely clear. Some of the key benefits you will experience with explainable AI are as follows:

Boosting Productivity

Explainability techniques help surface errors quickly, which supports the MLOps teams supervising AI systems. So, one of the key explainable AI benefits is that it shows you which features drive a model’s output. Knowing these features lets you verify that the model relies on broad patterns that will hold for future predictions rather than on odd quirks in historical data.

Fostering Trust and Adoption

Explainability is pivotal for winning the trust of customers, regulators, and the general public. If users don’t grasp the basis of a recommendation, even an advanced AI system can come across as unreliable. Understanding why AI suggests an action builds confidence, because professionals can see how a certain decision was reached.

Uncovering Valuable Strategies

The factors that drive business outcomes are sometimes hidden, leaving you puzzled. Unraveling how a model reaches its predictions can be worth more than the predictions themselves. For instance, great customer satisfaction can help your business grow. But understanding what led to that satisfaction can help you even more.

Ensuring AI’s Business Value

Explainability encourages your chosen AI development services provider to stay transparent. This way, you’ll be able to ensure objectives are met and keep requirements from getting lost in translation. Moreover, this will make you confident that your AI-based software delivers the expected value. That’s not all. In cases where you seek expertise, you can always turn back to them to elaborate on aspects you don’t get.

Managing Risks Effectively

Explainability aids in mitigating risks. AI that violates ethical norms can trigger intense scrutiny. In such a scenario, technical explanations that confirm compliance with laws, policies, and values can help. Additionally, these explanations can show that the system stays aligned with your company’s goals and standards.

How to Approach Explainable AI Development Services

After learning the benefits of explainable AI, you’d want to get started right away. But don’t jump in without knowing the process. So, here’s a step-by-step guide that will allow you to integrate explainability at its best in your AI system.

Firstly, Educate Yourself.

Grasp the basics of AI and its applications. Understand why explainability matters—it’s the key to building trust.

Next, Define Your Goals.

Clearly outline what you want to achieve with Explainable AI. Is it for predictive analysis, customer service, or something else? Clarity is your best friend here.

Now, Choose the Right Team.

Collaborate with experts who not only understand AI but also comprehend your industry’s nuances. Their experience will shape the project profoundly.

Data, Data, Data.

Gather high-quality data relevant to your goals. Remember, the output is only as good as the input. Clean, diverse data is the foundation of any successful AI model.

Transparency is Crucial.

Ensure the development process is transparent. Regular updates and clear communication with the developers are vital. Don’t hesitate to ask questions—understanding the process is your right.

Lastly, Test and Refine.

Rigorous testing is a must. Identify patterns, check for biases, and refine the model until it aligns perfectly with your goals.

Approaching explainable AI development services isn’t just about technology; it’s a collaborative journey where your understanding and engagement matter profoundly. Stay curious, ask questions, and soon, you’ll find yourself not just navigating but mastering the realm of Explainable AI Development Services!

Why Choose Matellio as your Explainable AI Development Services Provider?

Now that you know the process, it’s time to get set and go! Matellio has unrivaled expertise in developing cutting-edge AI solutions and tools. Why trust us to integrate explainability in your solution? Here are some of the key reasons why we stand out among the AI industry experts:

Expertise Beyond Comparison

Matellio boasts a team of AI professionals who have unmatched expertise. Their deep understanding of AI nuances ensures that your project is not just another task but a craft perfected with skills and knowledge.

Client-Centric Approach

We believe in the power of collaboration. Our experts don’t just develop solutions; they build relationships. Your goals become our mission. The team’s approach is centered around your needs, ensuring a tailor-made solution that fits like a glove.

Transparency in Every Step

Matellio takes pride in transparent processes. No jargon, no hidden agendas. You are an integral part of the development journey. Regular updates, clear communication, and detailed explanations are our forte.

Data Integrity and Security

Our experts understand the value of your data. With stringent security measures and a focus on data integrity, we ensure that your information is not just safe but utilized ethically to craft powerful, explainable AI models.

Customization at Its Core

Matellio doesn’t just keep up with trends; we set them. Our customized solutions are not just about the present; they are future-ready. With us, you are not just getting a service; you are investing in innovation that stands the test of time.

Excited to get on board for explainable AI development services? You can seek technology consulting services to explore more about how explainable AI models will suit your business. If you want to begin the journey right away, fill out this form and witness Matellio’s magic of building timeless next-gen solutions.
